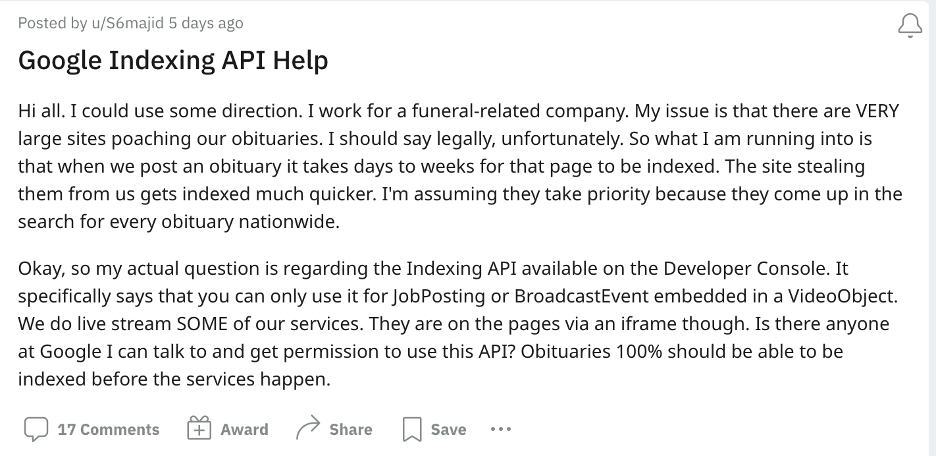

In the TechSEO Reddit community, a user asked for advice on how to get his pages indexed faster than the other sites that poach his content. He asked whether Google's Indexing API could help with the issue.

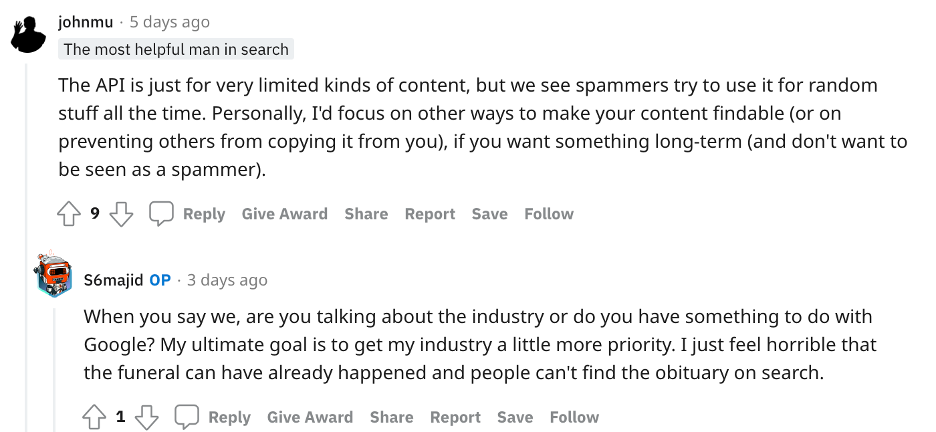

John Mueller chimed in, responding that the Indexing API is only meant for limited kinds of content, yet spammers still try to use it for random stuff all the time. He advised focusing instead on other ways to make the content findable, and on preventing others from copying it, as a long-term solution to the problem.

The original poster seemed unaware of who Mueller is and asked whether he had something to do with Google. Lol. Mueller replied, “Yes, I have something to do with Google :-)”

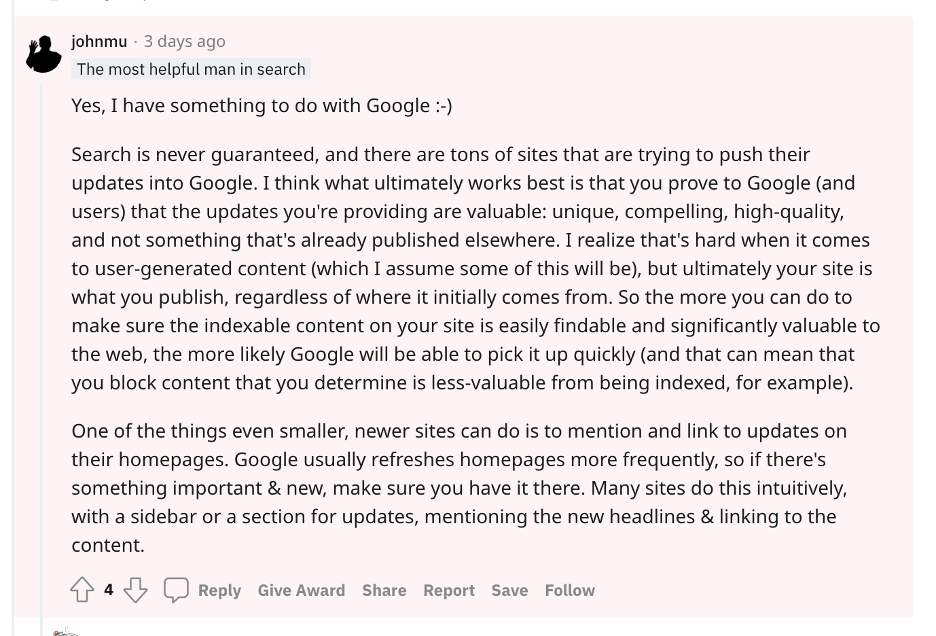

He also offered more advice on what the original poster could do to help his obituary site with this dilemma.

According to Mueller, search is never guaranteed, and tons of sites are trying to push their updates into Google. What ultimately works best is proving to Google and to users that the site's updates and content are valuable, compelling, high-quality, and not published elsewhere. While he acknowledges that this is hard with user-generated content, your site is ultimately what you publish, regardless of where it originally comes from.

In addition, the more easily findable the content is on the site, the more likely Google will be able to pick it up. He suggested linking to new content from the homepage, since Google usually crawls homepages frequently. This can be done with a sidebar or a section for new content that mentions each headline and links to it. Blocking less-valuable content from being indexed can also help.

In short, the more you can do to make the content on the site easily findable and genuinely valuable, the more likely it is that Google will pick it up quickly.

Based on his advice, creating valuable content on your site, building its authority, and making it look good in the eyes of Google and users is really the best bet for outranking other sites, especially those that end up poaching content. Copied content ranking better than the original has been a problem for a long time, and it still seems to be going on. The question is: is this really enough, especially when the site copying or republishing the original content is a bigger, more authoritative site (cue news aggregators that pull content from smaller sites)? What advice would you give to smaller, less visible sites?

Check out the Reddit thread here.