Many automated tools on the market help build social or Web 2.0 backlinks to a page or site. Many of them even offer an option to spin content, but the tools themselves say very little about whether or not you should post the exact same content.

For this test, we distinguish between two terms. Duplicate content is the same content repeated on the same site. The same content on two or more different sites will, for our purposes, be called syndicated content.

Press release publication is a form of syndicated content released through news channels. A press release will often result in the same piece of content being published on hundreds of sites. Nevertheless, you will find that perhaps only a few of the published instances are indexed. It has been suggested in multiple forums that Google considers a few copies sufficient and excludes the rest because they do not offer any additional information. Two other key points on press releases:

Assuming for the moment that press releases are “Google Safe”, what about doing your own syndication outside of news feeds, on Web 2.0 properties? Web 2.0 syndication is content that is syndicated, either manually or automatically, through programs such as Syndwire, IFTTT, or Buffer, so that it is posted across multiple Web 2.0s with a link back to a target webpage containing the exact same content.

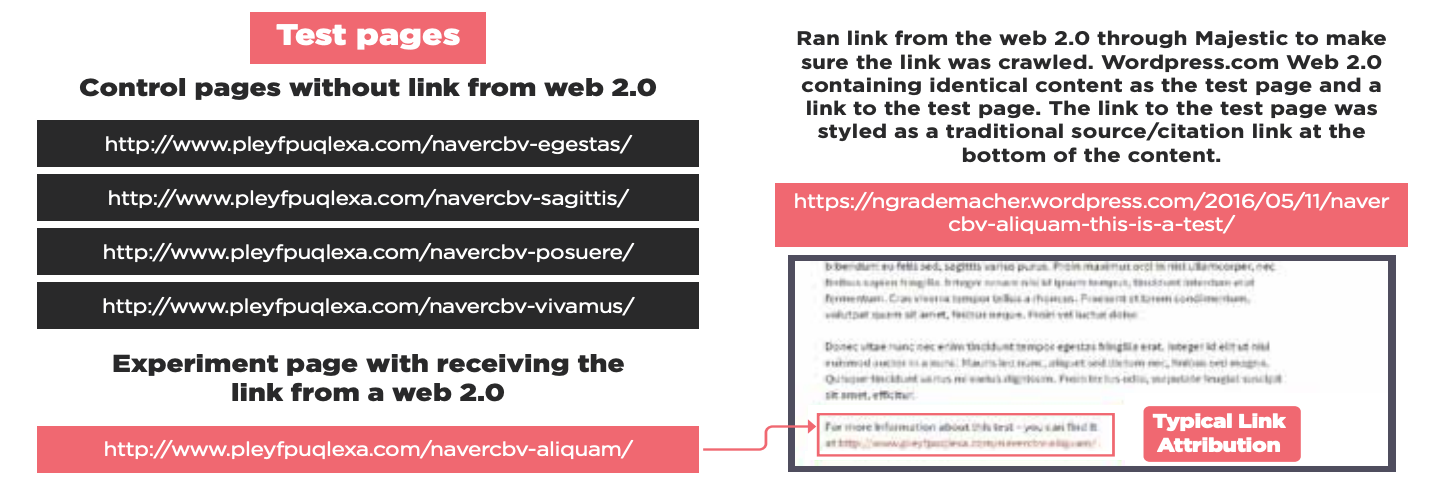

For this test, five pages were set up, each with a 500-word article and a keyword density below 1%. All pages were published and submitted to Google at the same time; once they were ranking, we determined which one sat in the middle of the group. We then created a post on a WordPress blog identical to the page ranking #2, which gave it room to move either up or down in the results.
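To make the "below 1% keyword density" constraint concrete, here is a minimal Python sketch. It assumes the common definition of keyword density (occurrences of the keyword divided by the total word count, times 100) and a single-word keyword; the function names `keyword_density` and `max_keyword_uses` are hypothetical and not part of any tool used in the test.

```python
import re


def keyword_density(text: str, keyword: str) -> float:
    """Keyword density in percent: occurrences of `keyword` / total words * 100.

    Assumption: single-word keyword, case-insensitive, simple word tokenizing.
    SEO tools differ in how they count phrases and stop words.
    """
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    hits = sum(1 for word in words if word == keyword.lower())
    return 100.0 * hits / len(words)


def max_keyword_uses(word_count: int, max_density_pct: float) -> int:
    """Largest number of keyword uses that keeps density strictly below the limit."""
    limit = word_count * max_density_pct / 100.0
    uses = int(limit)
    if uses == limit:  # landing exactly on the limit is not "below" it
        uses -= 1
    return max(uses, 0)


# Usage: a 500-word article kept under 1% density
print(max_keyword_uses(500, 1.0))  # 4
```

Under this definition, a 500-word article stays below 1% only if the keyword appears at most four times, since five uses would be exactly 1%.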

The Web 2.0 property was a WordPress.com blog containing content identical to the test page, plus a link to the test page. The link was styled as a traditional source/citation link at the bottom of the content. We ran the link from the Web 2.0 through Majestic to make sure it was being crawled.

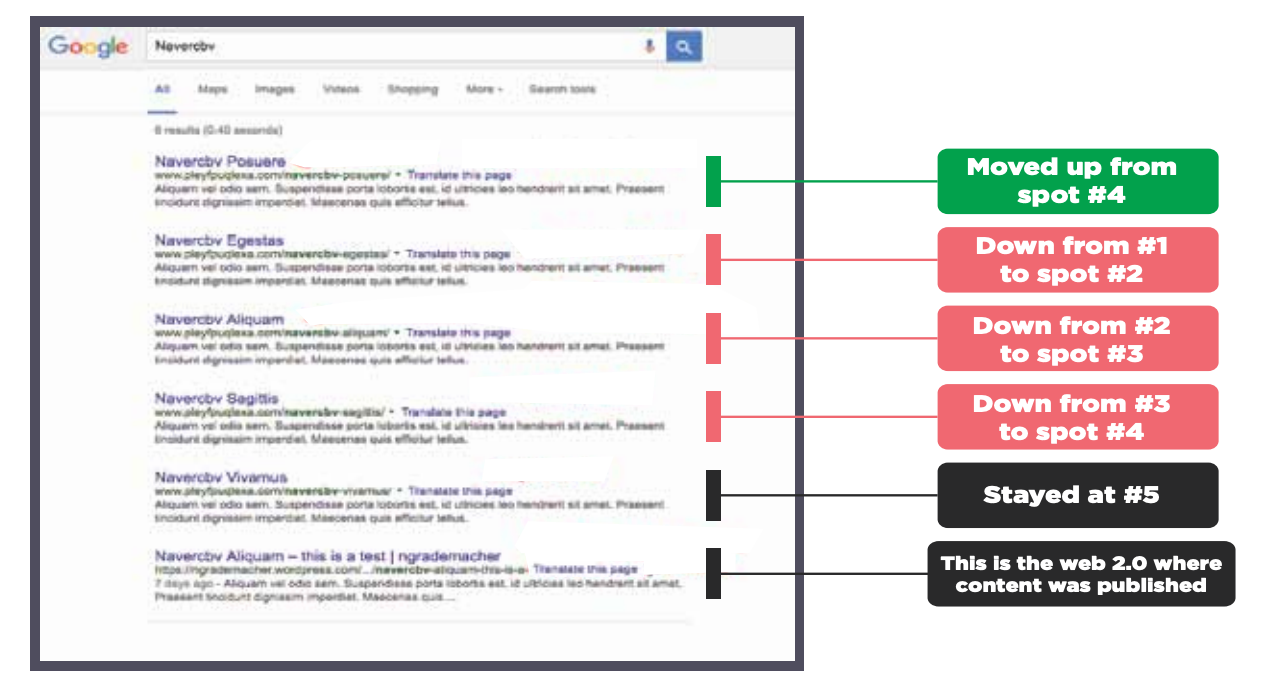

At first, the pages were indexed and held the order shown in the screenshot. A week later, the test page, the one with the Web 2.0 duplicate linking back to it, dropped one slot. Interestingly, the #4 page jumped up to #1, pushing the others down. We have no idea why. The #5 page stayed where it was.

It seems pretty clear that linking identical content to identical content is not a good idea. Once again, the results indicate that Google does not like linked duplicate/syndicated content that is posted outside of channels where identical content is expected.
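If you syndicate through an automated tool, it helps to know whether a given Web 2.0 post is a full copy of your page or only a partial one. The following is a hypothetical Python sketch, not a method used in this test: it compares the plain text of the original and the syndicated post with the standard library's `difflib.SequenceMatcher` and reports what share of the original's words appear, in order, in the copy. It assumes you already have the plain text of both pages, and the 0.95 cut-off is an arbitrary assumption, not a Google threshold.

```python
import difflib
import re


def words(text: str) -> list[str]:
    # Lowercase and keep only word characters so formatting differences are ignored.
    return re.findall(r"[a-z0-9']+", text.lower())


def copy_overlap(original: str, syndicated: str) -> float:
    """Share (0.0 to 1.0) of the original's words found, in order, in the syndicated copy."""
    a, b = words(original), words(syndicated)
    if not a:
        return 0.0
    matcher = difflib.SequenceMatcher(None, a, b, autojunk=False)
    # get_matching_blocks() ends with a zero-size sentinel, so summing sizes is safe.
    matched = sum(block.size for block in matcher.get_matching_blocks())
    return matched / len(a)


def classify_copy(original: str, syndicated: str, full_threshold: float = 0.95) -> str:
    # full_threshold is an arbitrary, hypothetical cut-off, not a Google number.
    if copy_overlap(original, syndicated) >= full_threshold:
        return "full copy"
    return "partial copy or excerpt"


article = "Our Dallas plumbers fix leaks, unclog drains and install water heaters."
print(classify_copy(article, article))                          # full copy
print(classify_copy(article, "Our Dallas plumbers fix leaks"))  # partial copy or excerpt
```

Comparing word sequences rather than raw strings keeps differences in whitespace, capitalization, and punctuation from hiding an otherwise identical copy.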

In this video, Clint talks about this test and syndicating content.

Test number 27 – Web 2.0 Duplicate Content.

I want to do a couple of things. First, we have to identify duplicate content and what it is. The idea behind duplicate content is having one piece, one web page, and then that article gets posted on another website, and then on another website, and so on.

Now, if it was a press release, that’d be syndication, and no one would say “duplicate content hurts you, you shouldn’t use the same content everywhere.” Press releases, apparently, are exempt from that because you’re not duplicating the content, you’re syndicating it. That doesn’t make any sense to me, because it’s the exact same content; it’s just on press release sites instead of a website.

But if you look at how Google naturally works, even in Google News, they sort that out. Typically, the content on the more authoritative or trusted domains will rank higher than, say, your standard run-of-the-mill news channel that gets syndicated through an API.

Then you have the duplicate content that is on your web page or your website. Typically, you’re gonna see that mainly in a local business build, especially if you’re targeting multiple cities. And frankly, Google’s kind of onto that. They know that it’s expected. So if you’re offering plumbing in Dallas, you have a set of services that you offer, and you’re talking about them on your Dallas plumber page, and you also offer all of those same services in Houston, the algorithm has been trained to be smart enough to know that you’re not going to reinvent the wheel and write completely unique content for your Houston page; you’re offering the same exact thing. There are only so many ways you can talk about plumbing, right? So we get that.

This test deals with actual Web 2.0 syndication, meaning the creation of duplicate content on Web 2.0 sites. The primary way a lot of this happens is this: you’re promoting your article using Syndwire, Onlywire, If This Then That (IFTTT), or one of those other link-building tools, and you have your RSS feed set up to show your full article. So when you publish, your full article goes to your RSS feed. IFTTT, or whatever your tool is, takes that entire article and posts it on your Blogger, your WordPress, Tumblr, whatever. Wherever it gets posted, an exact copy of that page ends up on that other site.

The idea is actually very sound: you’re automatically building backlinks to your page. The issue we want to test (if it is an issue, and okay, it is an issue) is that you’re copying your exact piece of content onto these other sites. Will that hurt you?

Now, the results of this test: a week after we set up the Web 2.0 post, the test page, with the exact same content pointing back to it, dropped one slot. The number four spot jumped to number one, pushing the other ones down, and number five stayed at number five. So, in plain terms, because that’s really important to explain:

Linking identical content to identical content, linking your copy to the original, is not a good idea. Google doesn’t like it when content is linked that way. It creates duplicate syndicated content inside the search results, and you could end up getting your page deindexed because the site you syndicated it to actually has more authority than your website or your web page.

A great example of this is if you create a sister Google Site to promote your stuff and copy your article to it. The Google Site will more than likely outrank your homepage or your original piece of content because of the authority of the Google Site. Google loves Google. Google’s gonna promote Google over your stuff; that’s typically how it works.

I’ve seen it tons of times: the IFTTT syndication method works. But it works a whole lot better when you use excerpts of your posts, so that your RSS feed publishes excerpts instead of duplicate content.
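A minimal sketch of the excerpt approach, assuming you generate the feed yourself: each RSS 2.0 `<item>` carries only a short excerpt and a link back, so a tool like IFTTT reading the feed has no full text to repost. In practice a CMS usually handles this for you (WordPress, for example, has a Reading setting that switches feeds between full text and an excerpt). The function names and the 55-word default here are hypothetical choices for illustration.

```python
from xml.sax.saxutils import escape


def make_excerpt(text: str, max_words: int = 55) -> str:
    """Return the first `max_words` words, adding an ellipsis if the text was cut."""
    tokens = text.split()
    if len(tokens) <= max_words:
        return " ".join(tokens)
    return " ".join(tokens[:max_words]) + " ..."


def excerpt_rss_item(title: str, url: str, body: str, max_words: int = 55) -> str:
    """Build a minimal RSS 2.0 <item> whose description is an excerpt plus a link back.

    No full-text element is included, so a tool reading this feed can only
    repost the excerpt, not the whole article.
    """
    description = f"{make_excerpt(body, max_words)} Read the full article: {url}"
    return (
        "<item>"
        f"<title>{escape(title)}</title>"
        f"<link>{escape(url)}</link>"
        f"<description>{escape(description)}</description>"
        "</item>"
    )


print(excerpt_rss_item("Dallas & Houston Plumbing", "https://example.com/plumbing",
                       "We fix leaks, unclog drains and install water heaters.", max_words=5))
```

`escape` handles characters such as `&` and `<` in titles and text, which would otherwise produce invalid XML.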

I have seen people complain that IFTTT syndication does not work. And nine times out of 10, it’s because they are syndicating the entire piece of content and creating duplicate content. Eventually, Google’s gonna filter something out, and typically it’s going to be the weak link. More often than not, your website’s going to be the weak link. So be careful with that.

Now, we are going to retest this, because, hey, you never know. But judging from recent experience and recent tests we published on SIA, in particular one today that showed how Google is filtering the search results, this method is just another example. Google isn’t picking your content because it was the first one published, the first one indexed, and you have the canonical; it’s actually picking based on authority.

We have more tests on this, such as a test on just having a blurb or description from the original content. Check out our test articles for more details.